Connecting to AWS S3 with R

Connecting AWS S3 to R is easy thanks to the aws.s3 package. In this tutorial, we’ll see how to:

- Set up credentials to connect R to S3

- Authenticate with aws.s3

- Read and write data from/to S3

1. Set Up Credentials To Connect R To S3

- If you haven’t done so already, you’ll need to create an AWS account.

- Sign in to the management console. Search for and pull up the S3 homepage.

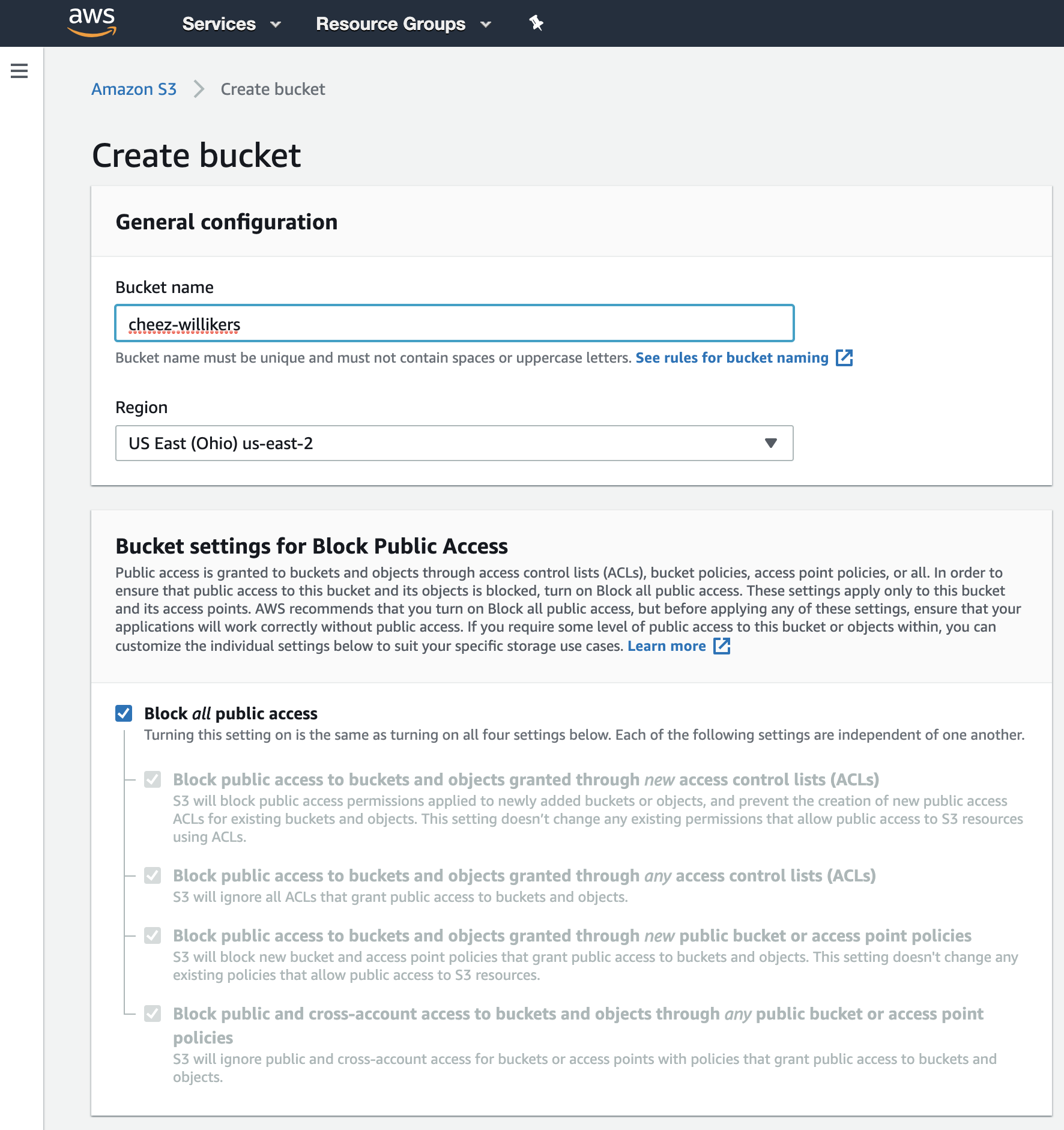

- Next, create a bucket. Give it a unique name, choose a region close to you, and keep the other default settings in place (or change them as you see fit). I’ll call my bucket cheez-willikers.

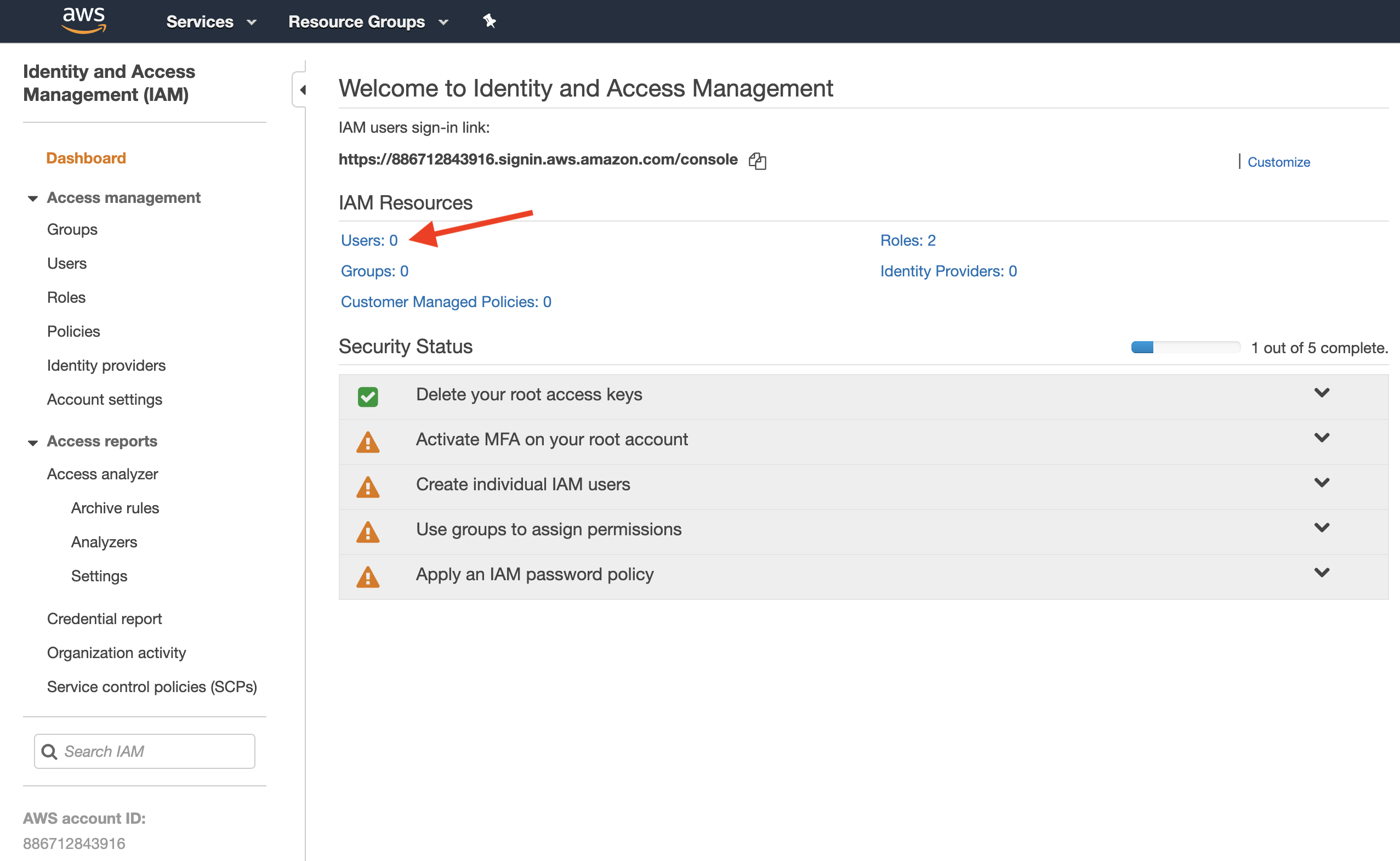

- Now we need to create a special user with S3 read and write permissions. Navigate to Identity and Access Management (IAM) and click on users.

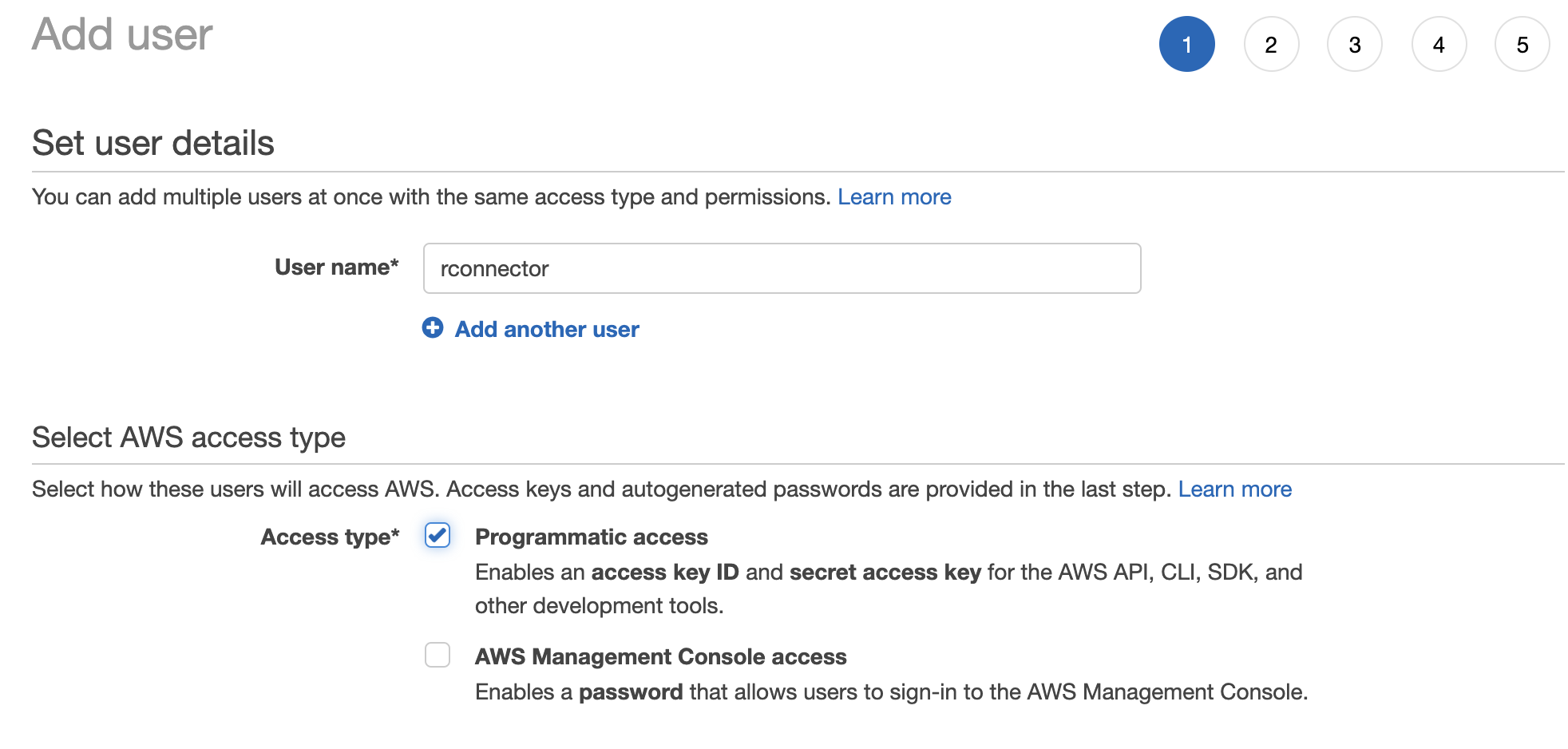

- Give your user a name (like rconnector) and give the user programmatic access.

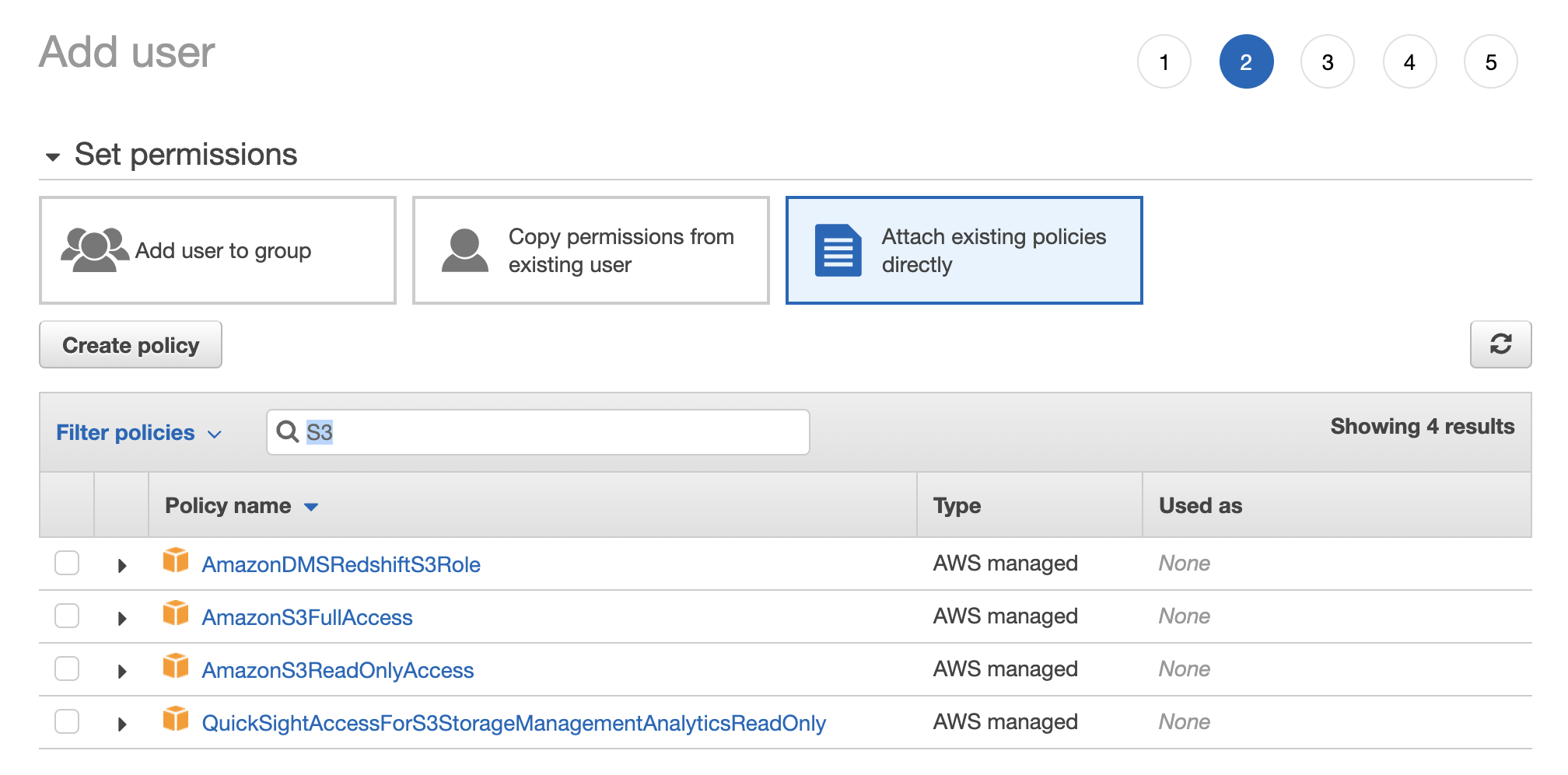

- Now we need to give the user permission to read and write data to S3. We have two options here. The easy option is to give the user full access to S3, meaning the user can read and write from/to all S3 buckets, and even create new buckets, delete buckets, and change permissions to buckets. To do this, select Attach Existing Policies Directly > search for S3 > check the box next to AmazonS3FullAccess.

The harder but better approach is to give the user access to read and write files only for the bucket we just created. To do this, select Attach Existing Policies Directly, click Create Policy, and then work through the Visual Policy Editor.

- Service: S3

- Actions: Everything besides Permissions Management

- Resources: add the following Amazon Resource Names (ARNs):

  - accesspoint: Any

  - bucket: cheez-willikers

  - job: Any

  - object: cheez-willikers (bucket) | Any (objects)

- Review the policy and give it a name.

- Attach the policy to the user.

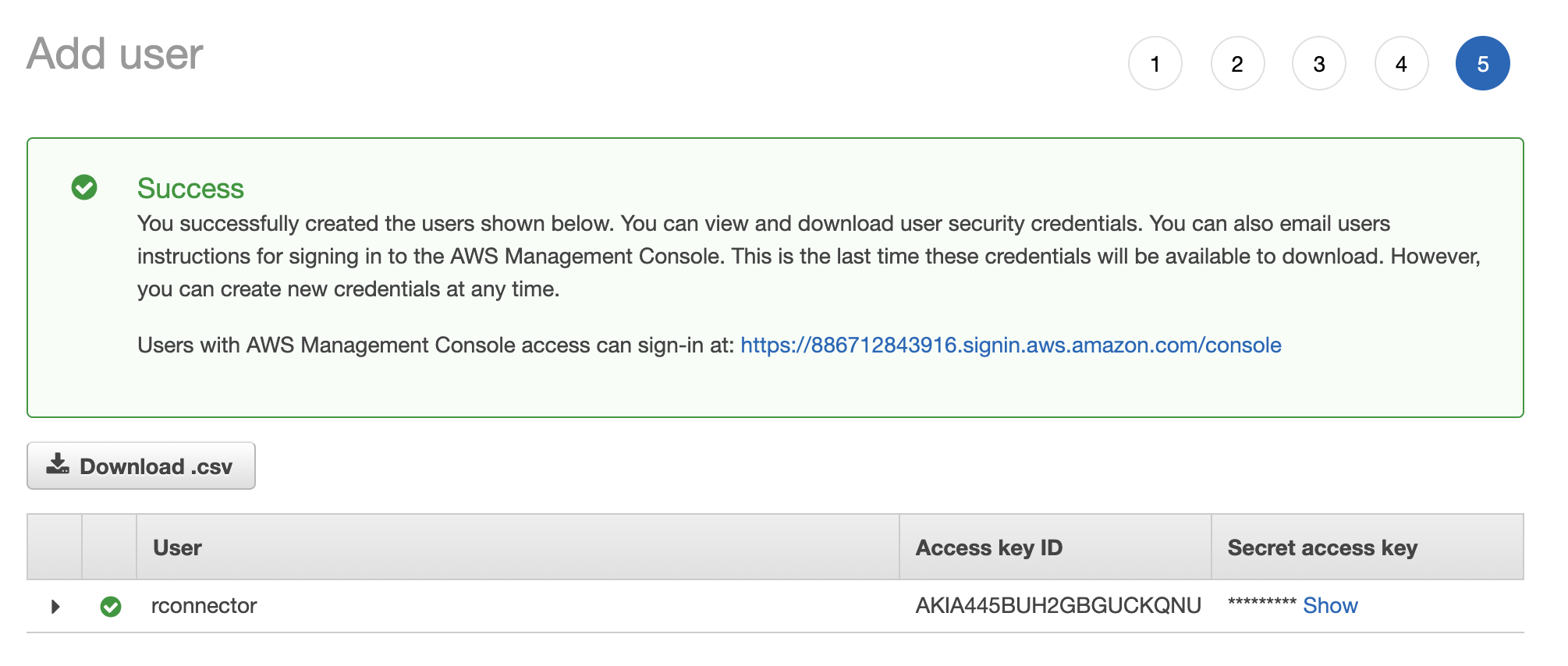

- Skip through the remaining steps to create the user until you get a Success message with user credentials. Download the user credentials and store them somewhere safe because this is your only opportunity to see the Secret Access Key from the AWS console. If you lose it, you can’t recover it (but you can create a new key). These credentials are what we’ll use to authenticate from R, to get access to our S3 bucket.

2. Authenticate With aws.s3

If you haven’t done so already, you’ll need to install the aws.s3 package.

install.packages("aws.s3")

To connect to S3, you need to authenticate. You can do this in one of two ways. Perhaps the easiest method is to set environment variables like this:

Sys.setenv(
  "AWS_ACCESS_KEY_ID" = "mykey",
  "AWS_SECRET_ACCESS_KEY" = "mysecretkey",
  "AWS_DEFAULT_REGION" = "us-east-2"
)

Note that AWS_DEFAULT_REGION should be the region of your S3 bucket.
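Before calling any aws.s3 function, it can help to confirm that these variables are actually set in the current session. The following is a minimal sketch in base R; the helper name `missing_aws_vars()` is made up for illustration and is not part of aws.s3. If you prefer not to hardcode secrets in a script, one common option is to put the same three variables in your `~/.Renviron` file, which R reads at startup.

```r
# The three environment variables used in this tutorial
required_vars <- c(
  "AWS_ACCESS_KEY_ID",
  "AWS_SECRET_ACCESS_KEY",
  "AWS_DEFAULT_REGION"
)

# Hypothetical helper (not part of aws.s3): returns the names of any
# variables that are unset or empty in the current R session
missing_aws_vars <- function(vars = required_vars) {
  values <- Sys.getenv(vars, unset = "")
  vars[values == ""]
}

# Report anything that still needs to be set
missing <- missing_aws_vars()
if (length(missing) > 0) {
  message("Not set: ", paste(missing, collapse = ", "))
}
```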

Now you can call aws.s3 functions like bucketlist() and, assuming your credentials were correct, you should get a list of all your S3 buckets.

bucketlist()

# Bucket CreationDate

# 1 cheez-willikers 2020-04-19T01:52:38.000Z

Two errors I stumbled upon here were

- Forbidden (HTTP 403) because I inserted the wrong credentials for my user

- Forbidden (HTTP 403) because I incorrectly set up my user’s permission to access S3
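If you want a friendlier message when one of these happens, you could wrap the call in `tryCatch()`. The sketch below assumes that aws.s3 signals the failure as a regular R error whose message contains "403", as in the errors quoted above. The wrapper name `safe_bucketlist()` and its `list_fn` argument are hypothetical, added only so the function can be tried without a live connection.

```r
# Hypothetical wrapper (not part of aws.s3). list_fn defaults to
# aws.s3::bucketlist but can be swapped out for experimentation.
safe_bucketlist <- function(list_fn = aws.s3::bucketlist) {
  tryCatch(
    list_fn(),
    error = function(e) {
      msg <- conditionMessage(e)
      if (grepl("403", msg, fixed = TRUE)) {
        # Assumption: a 403 points at credentials or permissions
        message("Access denied (403): check your access key, secret key, and the user's S3 policy.")
      } else {
        message("Request failed: ", msg)
      }
      NULL  # return NULL instead of stopping the script
    }
  )
}
```

Calling `safe_bucketlist()` with no arguments uses the real `bucketlist()`. On failure it prints a hint and returns `NULL` rather than halting.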

Alternatively, you can pass your access key and secret access key as parameters to aws.s3 functions directly. For example, you can make calls like this.

bucketlist(key = "mykey", secret = "mysecretkey")

# Bucket CreationDate

# 1 cheez-willikers 2020-04-19T01:52:38.000Z

3. Read And Write Data From/To S3

Let’s start by uploading a couple of CSV files to S3.

# Save iris and mtcars datasets to CSV files in tempdir()

write.csv(iris, file.path(tempdir(), "iris.csv"))

write.csv(mtcars, file.path(tempdir(), "mtcars.csv"))

Pro Tip: tempdir() returns the location of a temporary directory that R creates every time you start a session (and destroys when you end that session).
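A side note on the write.csv() calls above: by default, write.csv() also writes the row names, so a file written this way gains an extra first column (named `X`) when read back with read.csv(). For mtcars the row names are the car names, which you probably want to keep. For iris they are just row numbers. Here is a small sketch of the difference, using two made-up file names that are separate from the files uploaded in this tutorial:

```r
# Hypothetical file names, used only for this comparison
path_default <- file.path(tempdir(), "iris_default.csv")
path_clean <- file.path(tempdir(), "iris_clean.csv")

# Default behaviour: row names are written as an unnamed first column
write.csv(iris, path_default)

# row.names = FALSE leaves them out
write.csv(iris, path_clean, row.names = FALSE)

# Compare the columns that come back
names(read.csv(path_default))
names(read.csv(path_clean))
```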

# Upload files to S3 bucket

put_object(
  file = file.path(tempdir(), "iris.csv"),
  object = "iris.csv",
  bucket = "cheez-willikers"
)

put_object(
  file = file.path(tempdir(), "mtcars.csv"),
  object = "mtcars.csv",
  bucket = "cheez-willikers"
)

If you get an error like 301 Moved Permanently, it most likely means that something’s gone wrong with regards to your region. It could be that

- You’ve misspelled or inserted the wrong region name for the environment variable AWS_DEFAULT_REGION (if you’re using environment vars)

- You’ve misspelled or inserted the wrong region name for the region parameter of put_object() (if you aren’t using environment vars)

- You’ve incorrectly set up your user’s permissions

These files are pretty small, so uploading them is a breeze. If you’re trying to upload a big file (> 100MB), you may want to set multipart = TRUE within the put_object() function.
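One way to apply this automatically is to check the file size first and turn on multipart only when it is needed. The sketch below is just one possible approach: `needs_multipart()` and `upload_file()` are hypothetical helper names, and the 100 MB threshold simply mirrors the rule of thumb above.

```r
# Hypothetical helper: TRUE when the file is larger than `threshold` bytes
needs_multipart <- function(path, threshold = 100 * 1024^2) {
  file.size(path) > threshold
}

# Hypothetical helper: upload a file, enabling multipart only for big files.
# By default the object key is the file's base name.
upload_file <- function(path, bucket, object = basename(path)) {
  aws.s3::put_object(
    file = path,
    object = object,
    bucket = bucket,
    multipart = needs_multipart(path)
  )
}
```

With this in place, a call like `upload_file(file.path(tempdir(), "iris.csv"), bucket = "cheez-willikers")` would upload the small iris file without multipart.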

Now let’s list all the objects in our bucket using get_bucket().

get_bucket(bucket = "cheez-willikers")

# Bucket: cheez-willikers

#

# $Contents

# Key: iris.csv

# LastModified: 2020-04-20T14:25:57.000Z

# ETag: "885a41d063a7923ae8d0e8404d9291a3"

# Size (B): 4821

# Owner: ad135532a3647566705f5121f2f79783da0c0874af2e833eaf37f299420adee0

# Storage class: STANDARD

#

# $Contents

# Key: mtcars.csv

# LastModified: 2020-04-20T03:54:01.000Z

# ETag: "b6b77e00e1863bee2cd6dcfd05004ff2"

# Size (B): 71

# Owner: ad135532a3647566705f5121f2f79783da0c0874af2e833eaf37f299420adee0

# Storage class: STANDARD

This returns a list of s3_objects. I like using the rbindlist() function from the data.table package to collapse these objects into a nice and clean data.table (i.e. data.frame).

data.table::rbindlist(get_bucket(bucket = "cheez-willikers"))

# Key LastModified ETag Size

# 1: iris.csv 2020-04-20T14:25:57.000Z "885a41d063a7923ae8d0e8404d9291a3" 4821

# 2: mtcars.csv 2020-04-20T03:54:01.000Z "b6b77e00e1863bee2cd6dcfd05004ff2" 71

# Owner StorageClass Bucket

# 1: ad135532a3647566705f5121f2f79783da0c0874af2e833eaf37f299420adee0 STANDARD cheez-willikers

# 2: ad135532a3647566705f5121f2f79783da0c0874af2e833eaf37f299420adee0 STANDARD cheez-willikers

We can load one of these CSV files from S3 into R with the s3read_using() function. Here are three different ways to do this:

s3read_using(FUN = read.csv, bucket = "cheez-willikers", object = "iris.csv") # bucket and object specified separately

s3read_using(FUN = read.csv, object = "s3://cheez-willikers/iris.csv") # use the s3 URI

s3read_using(FUN = data.table::fread, object = "s3://cheez-willikers/iris.csv") # use data.table's fread() function for fast CSV reading

Note that the second and third examples use the object’s URI which is given as s3://<bucket-name>/<key>.
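If you build these URIs often, a tiny helper keeps the format in one place. This is a sketch only; `s3_uri()` is a made-up name and not something aws.s3 provides.

```r
# Hypothetical helper: build an S3 URI of the form s3://<bucket-name>/<key>
s3_uri <- function(bucket, key) {
  # Drop any leading slashes from the key to avoid "s3://bucket//key"
  key <- sub("^/+", "", key)
  paste0("s3://", bucket, "/", key)
}

s3_uri("cheez-willikers", "iris.csv")
# [1] "s3://cheez-willikers/iris.csv"
```

The result can be passed as the `object` argument in the second and third examples above.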

Alternatively, you could download these files with save_object() and then read them from disk.

tmp <- tempfile() # temp filepath like /var/folders/vq/km5xms9179s_6vhpw5jxfrth0000gn/T//RtmpKgMGfZ/file4c6e2cfde13e

save_object(object = "s3://cheez-willikers/iris.csv", file = tmp) # download the object to the temp file

read.csv(tmp) # read the downloaded copy

GormAnalysis
